Legal and compliance leaders, cybersecurity professionals, and information governance experts must work together to effectively assess and mitigate risks.

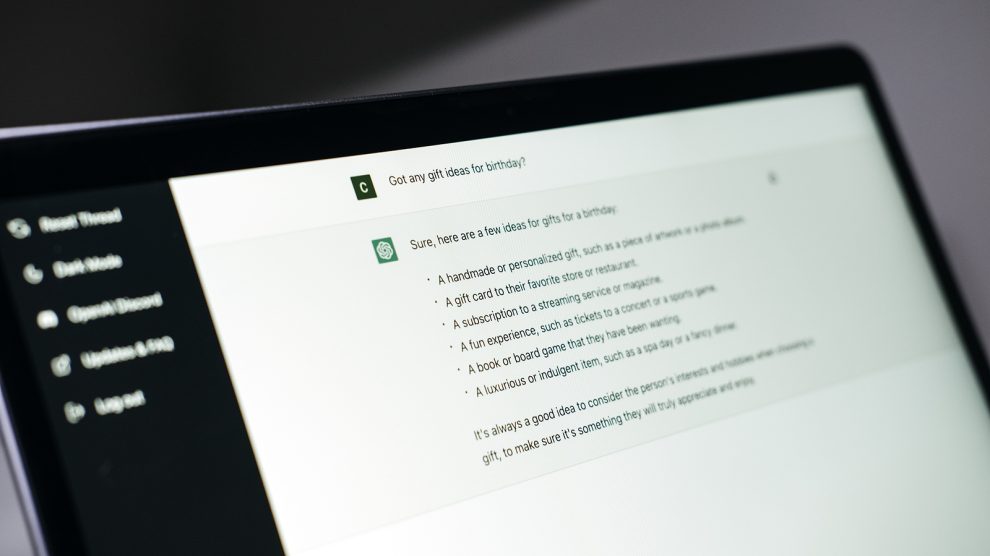

As AI tools like ChatGPT and other large language models (LLMs) see increasing use, Gartner Inc., a research and advisory company, stresses the importance of vigilance.

Ron Friedmann, Senior Director Analyst in the Gartner Legal and Compliance Practice, has raised concerns that outputs from ChatGPT and similar tools are susceptible to several risks. He emphasises that legal and compliance leaders need to determine whether these risks pose significant threats to their organisation and devise appropriate strategies to manage them, in order to avert serious legal, reputational, and financial consequences.

One risk that Friedmann points out is that ChatGPT and other LLM tools can generate responses that seem plausible yet are incorrect. To counter this, he advises organisations to review ChatGPT outputs for accuracy and relevance before accepting them.

A further risk lies in data privacy and confidentiality. Information entered into ChatGPT could potentially become part of its training dataset, raising the alarm about the misuse of sensitive, proprietary, or confidential information. To guard against this, Friedmann suggests that legal and compliance departments implement strict compliance frameworks that prohibit the input of sensitive personal or organisational data into public LLM tools.

Despite efforts by OpenAI to limit bias in ChatGPT, there have been known instances of bias, which constitutes another risk. Friedmann urges legal and compliance leaders to stay informed about laws governing AI bias and ensure their guidelines are compliant.

Intellectual property (IP) and copyright pose a further risk. Because ChatGPT is trained on extensive internet data, including copyrighted content, its outputs could infringe copyright or IP protections. Friedmann therefore emphasises the importance of leaders staying updated on copyright law changes that are relevant to ChatGPT’s output.

Malicious actors have already misused ChatGPT to spread false information, so the threat of cyber fraud is real. To counter this, Friedmann suggests that legal and compliance leaders work closely with cyber risk managers to keep company cybersecurity personnel well-informed.

Lastly, a failure to disclose the usage of ChatGPT, especially when it functions as a customer support chatbot, can result in consumer protection risks. Businesses might face legal action and lose customer trust. In light of this, Friedmann advises that organisations ensure their usage of ChatGPT complies with all relevant laws and proper disclosures have been made to customers.

Significant implications

The risks highlighted by Gartner in its report, Gartner Identifies Six ChatGPT Risks Legal and Compliance Leaders Must Evaluate, have significant implications for cybersecurity, information governance, and legal discovery professionals.

The report brings to light potential vulnerabilities that could be exploited, leading to misinformation, data breaches, or even cyber fraud. Legal and compliance leaders play a crucial role in setting guidelines and controls for the usage of generative AI tools like ChatGPT, ensuring compliance with AI bias laws, copyright and IP laws, and consumer protection laws.

To effectively manage these risks, organisations must prioritise the assessment of ChatGPT outputs for accuracy and relevance. They should implement strict compliance frameworks to protect data privacy and confidentiality, prohibiting the input of sensitive information into public LLM tools. Staying informed about AI bias laws and continuously monitoring for bias in ChatGPT’s outputs is essential. Keeping up with copyright law changes can help mitigate IP and copyright infringement risks.

Collaboration between legal and compliance leaders and cyber risk managers is crucial to addressing the threat of cyber fraud. By working together and keeping cybersecurity personnel well-informed, organisations can strengthen their defences against malicious actors.

Additionally, organisations must ensure proper disclosure of the usage of ChatGPT, particularly in customer support roles, to avoid consumer protection risks, legal action, and the erosion of customer trust. Compliance with relevant laws and regulations is paramount, and organisations should make transparent disclosures to customers about the involvement of AI tools like ChatGPT in their interactions.

Robust strategies and frameworks

The risks associated with ChatGPT underscore the need for a comprehensive approach to managing AI tools in the corporate environment. Legal and compliance professionals must take a proactive role in assessing and mitigating these risks to protect their organisations from potential legal and reputational harm. They should collaborate closely with cybersecurity experts, information governance specialists, and other stakeholders to develop robust strategies and frameworks.

Cybersecurity professionals play a vital role in safeguarding organisations against the risks posed by ChatGPT. They should be actively involved in the implementation of security measures to protect sensitive data and prevent unauthorised access. Regular audits and monitoring of AI systems are crucial to detect and address any potential vulnerabilities or breaches. By working closely with legal and compliance teams, cybersecurity professionals can ensure that AI systems meet the necessary security standards and are aligned with regulatory requirements.

Information governance professionals are responsible for establishing policies and procedures that govern the collection, storage, and usage of data, including data processed by ChatGPT. They should work in tandem with legal and compliance teams to ensure that data handling practices are compliant with privacy regulations and organisational policies. This includes implementing mechanisms to prevent the input of sensitive or confidential information into public AI tools and developing guidelines for data retention and disposal.
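One such mechanism could be a screening step that checks a prompt before it is sent to a public AI tool. The following is a minimal sketch in Python, not a method described by Gartner: the pattern list, the category names, and the functions `screen_prompt` and `is_allowed` are all hypothetical, and a real deployment would use rules defined by the organisation's legal, compliance, and information governance teams.

```python
import re

# Hypothetical patterns, for illustration only. A real policy would be
# defined by legal, compliance, and information governance teams.
SENSITIVE_PATTERNS = {
    "email address": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "payment card number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "confidentiality marking": re.compile(
        r"\b(?:confidential|internal only)\b", re.IGNORECASE
    ),
}


def screen_prompt(prompt: str) -> list[str]:
    """Return the names of the sensitive categories found in the prompt."""
    return [
        name
        for name, pattern in SENSITIVE_PATTERNS.items()
        if pattern.search(prompt)
    ]


def is_allowed(prompt: str) -> bool:
    """Allow the prompt only if no sensitive category was detected."""
    return not screen_prompt(prompt)
```

A prompt that trips any pattern could be blocked or routed for review instead of being submitted. Simple pattern matching like this will miss many kinds of sensitive content, so it would complement, not replace, policy and training.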

Continuous education and training are essential for all professionals involved in managing ChatGPT and similar AI tools. As the technology evolves and new risks emerge, it is crucial to stay updated on legal and regulatory developments, industry best practices, and emerging technologies. By investing in the professional development of employees and fostering a culture of awareness and accountability, organisations can build a strong defence against potential risks.

Furthermore, organisations should consider engaging with AI vendors and developers to address these risks collaboratively. Open communication and partnership between organisations and AI providers can lead to the development of more secure and reliable AI tools. This includes sharing insights on potential risks, discussing data privacy concerns, and advocating for adopting ethical and responsible AI practices.

The risks identified by Gartner in its report highlight the critical importance of managing ChatGPT and similar AI tools in the corporate environment.

Legal and compliance leaders, cybersecurity professionals, and information governance experts must work together to effectively assess and mitigate these risks. Organisations can harness the benefits of generative AI tools while minimising potential pitfalls by implementing robust frameworks, staying informed about relevant laws and regulations, and fostering collaboration among different departments.

Continuous monitoring, auditing, and improving AI-related systems are imperative to stay ahead of evolving threats and ensure compliance in an increasingly AI-driven world. With a proactive and comprehensive approach, organisations can navigate the complex landscape of AI governance and protect themselves from potential legal, reputational, and financial consequences.

This content has been produced in collaboration with a partner organisation through our Global Visibility Programme.

The original article is located at Managing ChatGPT in the Corporate Environment? Six Crucial Risk Factors Highlighted by Gartner.